Marcel Lancelle - Publications

2022


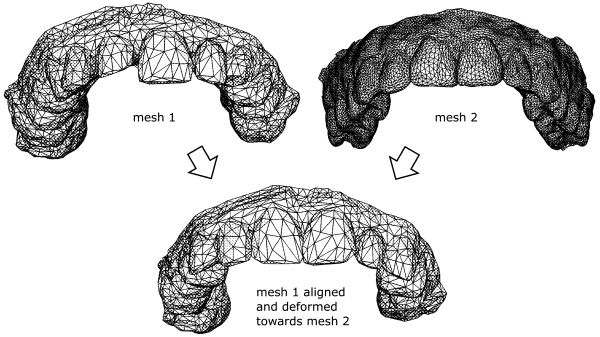

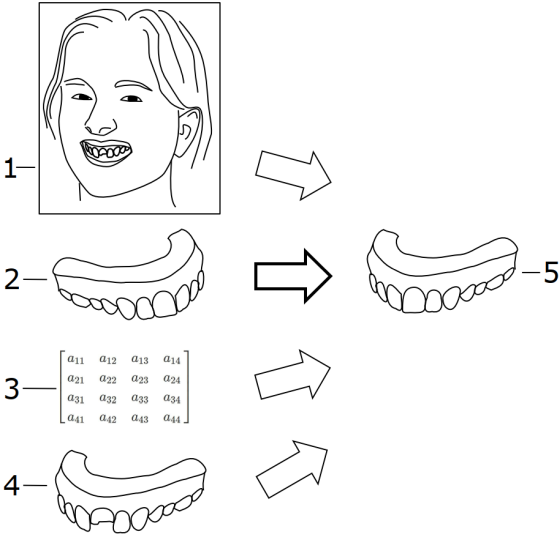

Dental model attributes transfer. Marcel Lancelle, Roland Mörzinger, Nicolas Degen, Gábor Sörös, Nemanja Bartolovic. US11244519B2 View at Google. Abstract: The invention pertains to a method for transferring properties from a reference dental model (20) to a primary dental model (10), wherein the method comprises a non-rigid alignment of the primary dental model and the reference dental model, comprising applying an algorithm to minimize a measure of shape deviation between the primary dental model and the reference dental model, and transferring properties of the reference dental model to the primary dental model.

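The transfer step can be illustrated with a minimal Python sketch. It assumes the non-rigid alignment has already brought both models into a common coordinate frame, uses a nearest-neighbor lookup as a stand-in for the property transfer, and uses the mean squared closest-point distance as an example "measure of shape deviation". These choices, the function names `shape_deviation` and `transfer_properties`, and the tooth labels are hypothetical, not taken from the patent.

```python
import math


def nearest_index(point, vertices):
    """Index of the vertex closest to `point` (brute-force search)."""
    return min(range(len(vertices)), key=lambda i: math.dist(point, vertices[i]))


def shape_deviation(primary, reference):
    """Mean squared distance from each primary vertex to its closest reference vertex.

    A non-rigid alignment would deform the primary model to make this value small.
    """
    total = 0.0
    for p in primary:
        q = reference[nearest_index(p, reference)]
        total += math.dist(p, q) ** 2
    return total / len(primary)


def transfer_properties(primary, reference, reference_props):
    """Copy the property of the closest reference vertex to every primary vertex."""
    return [reference_props[nearest_index(p, reference)] for p in primary]


# Two already-aligned toy "models" with hypothetical tooth labels
primary = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
reference = [(0.1, 0.0, 0.0), (0.9, 0.0, 0.0)]
labels = transfer_properties(primary, reference, ["tooth_11", "tooth_21"])
deviation = shape_deviation(primary, reference)
```

For real meshes with many thousands of vertices, a spatial index such as a k-d tree would replace the brute-force search.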

2021


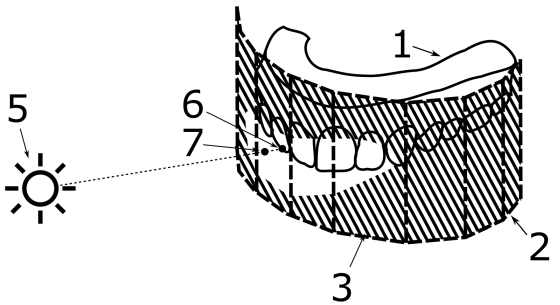

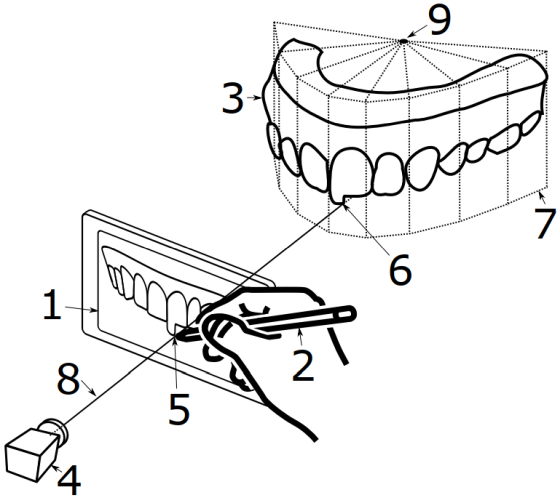

Rendering of dental models. Marcel Lancelle, Roland Mörzinger, Nicolas Degen, Gábor Sörös, Nemanja Bartolovic. US11083552B2 View at Google. Abstract: The invention pertains to a method for realistic visualization of a 3D virtual dental model (1) in a 2D image (20) of a face, the method comprising estimating or assuming a lighting situation in the image of the face, determining boundaries of an inner mouth region in the image, computing, based on the boundaries of the inner mouth region, on a 3D face geometry and on the lighting situation, a shadowing in the inner mouth region, computing a lighting of the dental model (1) based at least on the computed shadowing, and visualizing the dental model (1) and the 2D image (20), wherein at least the inner mouth region in the image is overlaid with a visualization (21) of the dental model having the computed lighting.

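The final overlay step, restricting the lit dental rendering to the inner mouth region, can be sketched in Python. This is an illustrative assumption rather than the patented method: images are small nested lists of RGB tuples, the mouth mask and a per-pixel shadow factor (1 = fully lit, 0 = fully shadowed) are taken as already computed, and shadowing is applied by simple multiplication. The name `composite_mouth` is hypothetical.

```python
def composite_mouth(face, render, mouth_mask, shadow):
    """Overlay a rendered dental model onto a face image inside the inner mouth region.

    face, render: 2D lists of (r, g, b) tuples with values in [0, 1], same size.
    mouth_mask:   2D list of bools, True inside the inner mouth region.
    shadow:       2D list of floats in [0, 1] that darken the rendering.
    """
    out = []
    for y, row in enumerate(face):
        out_row = []
        for x, face_pixel in enumerate(row):
            if mouth_mask[y][x]:
                s = shadow[y][x]
                # Darken the rendered teeth by the shadow factor at this pixel
                out_row.append(tuple(c * s for c in render[y][x]))
            else:
                # Outside the mouth the original photo is kept unchanged
                out_row.append(face_pixel)
        out.append(out_row)
    return out


face = [[(0.0, 0.0, 0.0), (0.2, 0.2, 0.2)]]
render = [[(0.8, 0.6, 0.4), (1.0, 1.0, 1.0)]]
mask = [[True, False]]
shadow = [[0.5, 1.0]]
result = composite_mouth(face, render, mask, shadow)
```

A production renderer would do this on the GPU and blend softly at the mask boundary; the hard mask only keeps the sketch short.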

Rendering A Dental Model In An Image. Marcel Lancelle, Roland Mörzinger, Nicolas Degen, Gábor Sörös, Nemanja Bartolovic. EP3629301B1, US11213374B2 View at Google View at Google. Abstract: The present invention pertains to a computer-implemented method for visualization of virtual three-dimensional models of dentitions in an image or image stream of a person's face. In particular, the described method allows realistic, i.e. naturally looking, visualization of dental models in dental virtual mock-up applications, such as dental augmented reality applications.


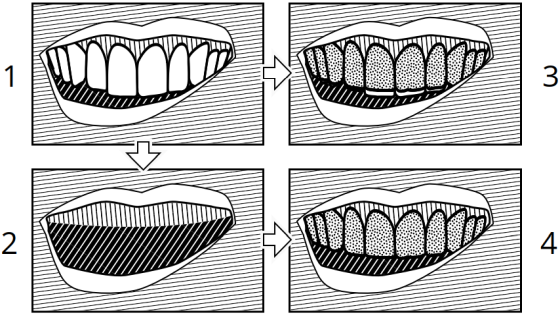

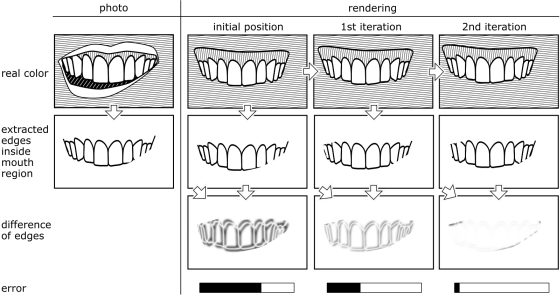

Method for aligning a three-dimensional model of a dentition of a patient to an image of the face of the patient recorded by a camera. Marcel Lancelle, Roland Mörzinger, Nicolas Degen, Gábor Sörös, Nemanja Bartolovic. EP3530232B1, US11544861B2 View at Google. Abstract: The present invention relates to a computer implemented method for visualizing two-dimensional images obtained from a three-dimensional model of a dental situation in each image of the face of a patient recorded by a camera in a video sequence of subsequent images, each image including the mouth opening of the patient, wherein the three-dimensional model of dental situation is based on a three-dimensional model of the dentition of the patient and compared to the three-dimensional model of the dentition includes modifications due to dental treatment or any other dental modification.

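Overlaying the 3D dentition on a video frame requires projecting model points into the image once the pose is known. The Python sketch below assumes an ideal pinhole camera without lens distortion and a given model-to-camera rotation and translation; an alignment step would search for the pose that minimizes the reprojection error against observed image features. The names `project_points` and `reprojection_error` are hypothetical, not from the patent.

```python
def project_points(points, rotation, translation, focal, cx, cy):
    """Project 3D model points to pixel coordinates with a pinhole camera.

    rotation:    3x3 row-major list mapping model to camera coordinates.
    translation: (tx, ty, tz) in camera coordinates.
    focal:       focal length in pixels; (cx, cy) is the principal point.
    """
    pixels = []
    for p in points:
        # Camera-frame coordinates: X_c = R * p + t
        xc = [sum(rotation[i][j] * p[j] for j in range(3)) + translation[i] for i in range(3)]
        if xc[2] <= 0:
            raise ValueError("point lies behind the camera")
        pixels.append((focal * xc[0] / xc[2] + cx, focal * xc[1] / xc[2] + cy))
    return pixels


def reprojection_error(projected, observed):
    """Mean squared pixel distance between projected and observed 2D points."""
    return sum((u - a) ** 2 + (v - b) ** 2
               for (u, v), (a, b) in zip(projected, observed)) / len(observed)


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
pts = project_points([(1.0, 2.0, 0.0)], identity, (0.0, 0.0, 10.0), 100.0, 50.0, 50.0)
```

In a video, the pose found for one frame is a natural starting point for the search in the next frame.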

Dental design transfer. Marcel Lancelle, Roland Mörzinger, Nicolas Degen, Gábor Sörös, Nemanja Bartolovic. US10980621B2 View at Google. Abstract: The invention pertains to a method for exporting design data of a virtual 3D dental design model (12) from an AR application, the AR application being adapted to visualize the dental design model with a preliminary pose and scale (13) in an image (11) of a face comprising a mouth region with a dental situation. The invention further pertains to a method for visualizing a 3D dental design model in an AR application.

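Exporting a design placed in the AR view amounts to carrying its pose and scale over to another coordinate frame. A minimal Python sketch, assuming the preliminary pose and scale are described by a similarity transform (uniform scale `s`, rotation `R`, translation `t`) and, for brevity, a rotation about one axis only; the helper names are hypothetical and the patent's actual export format is not shown here.

```python
import math


def rot_z(angle):
    """Rotation matrix about the z axis (radians), row-major."""
    c, sn = math.cos(angle), math.sin(angle)
    return [[c, -sn, 0.0], [sn, c, 0.0], [0.0, 0.0, 1.0]]


def apply_similarity(points, s, R, t):
    """q = s * R p + t for every point p."""
    return [tuple(s * sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
            for p in points]


def invert_similarity(points, s, R, t):
    """p = R^T (q - t) / s, valid because R is orthonormal."""
    out = []
    for q in points:
        d = [q[i] - t[i] for i in range(3)]
        out.append(tuple(sum(R[j][i] * d[j] for j in range(3)) / s for i in range(3)))
    return out


design = [(1.0, 2.0, 3.0), (-0.5, 0.0, 4.0)]
R = rot_z(0.3)
placed = apply_similarity(design, 1.5, R, (4.0, 5.0, 6.0))
recovered = invert_similarity(placed, 1.5, R, (4.0, 5.0, 6.0))
```

Storing `s`, `R` and `t` alongside the unmodified design model is enough to reproduce the placement elsewhere.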

2020


Dental model attributes transfer. Marcel Lancelle, Roland Mörzinger, Nicolas Degen, Gábor Sörös, Nemanja Bartolovic. US10803675B2 View at Google. Abstract: The invention pertains to a method for transferring properties from a reference dental model (20) to a primary dental model (10), wherein the method comprises a non-rigid alignment of the primary dental model and the reference dental model, comprising applying an algorithm to minimize a measure of shape deviation between the primary dental model and the reference dental model, and transferring properties of the reference dental model to the primary dental model.


Computer implemented method for modifying a digital three-dimensional model of a dentition. Marcel Lancelle, Roland Mörzinger, Nicolas Degen, Gábor Sörös, Nemanja Bartolovic. US10779917B2, US11344392B2 View at Google. Abstract: The present invention relates to a computer implemented method for modifying a digital three-dimensional model (3) of a dentition comprising:


2019


Controlling Motion Blur in Synthetic Long Time Exposures. Marcel Lancelle, Pelin Dogan, Markus Gross. Eurographics 2019. View at Eurographics Digital Library (with supplemental material). Download author's version (21 MB), supplemental material (289 MB). Abstract: In a photo, motion blur can be used as an artistic style to convey motion and to direct attention. In panning or tracking shots, a moving object of interest is followed by the camera during a relatively long exposure. The goal is to get a blurred background while keeping the object sharp. Unfortunately, it can be difficult or even impossible to follow the object precisely. Often, many attempts or specialized physical setups are needed. BibTeX

@inproceedings {2019_Lancelle_MotionBlur,

author = "Lancelle, Marcel and Dogan, Pelin and Gross, Markus",

title = "Controlling Motion Blur in Synthetic Long Time Exposures",

booktitle = "Computer Graphics Forum (Proceedings of Eurographics)",

volume = "38",

year = "2019",

publisher = "John Wiley \& Sons",

organization = "Eurographics",

}

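The panning effect described above can be emulated from a video by registering every frame to the moving object and averaging: the object stays sharp while the static background smears. The Python sketch below is a simplification under stated assumptions, not the paper's method: grayscale frames are nested lists, the object's integer offset relative to the first frame is assumed known (for example from a tracker), and samples shifted outside the frame are skipped. `synthetic_long_exposure` is a hypothetical name.

```python
def synthetic_long_exposure(frames, offsets):
    """Average frames after shifting each one so the tracked object stays in place.

    frames:  list of grayscale images (lists of rows of floats), all the same size.
    offsets: per-frame integer (dx, dy) of the object relative to the first frame.
    Output pixels average only the samples that fall inside each frame.
    """
    h, w = len(frames[0]), len(frames[0][0])
    acc = [[0.0] * w for _ in range(h)]
    cnt = [[0] * w for _ in range(h)]
    for frame, (dx, dy) in zip(frames, offsets):
        for y in range(h):
            for x in range(w):
                # Sample where the object has moved to, so it lines up across frames
                sx, sy = x + dx, y + dy
                if 0 <= sx < w and 0 <= sy < h:
                    acc[y][x] += frame[sy][sx]
                    cnt[y][x] += 1
    return [[acc[y][x] / cnt[y][x] if cnt[y][x] else 0.0 for x in range(w)]
            for y in range(h)]


# One-row example: static background 1, 2, 3, 4 with a bright object (9) moving right
frames = [[[1.0, 9.0, 3.0, 4.0]], [[1.0, 2.0, 9.0, 4.0]]]
result = synthetic_long_exposure(frames, [(0, 0), (1, 0)])
```

Setting all offsets to zero gives an ordinary synthetic long exposure, in which the moving object blurs instead of the background.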

Reliability of a three-dimensional facial camera for dental and medical applications: a pilot study. Shiming Liu, Murali Srinivasan, Roland Mörzinger, Marcel Lancelle, Thabo Beeler, Markus Gross, Barbara Solenthaler, Vincent Fehmer, Irena Sailer. The Journal of Prosthetic Dentistry. View at the JPD. Abstract: STATEMENT OF PROBLEM: Three-dimensional visualization for pretreatment diagnostics and treatment planning is necessary for surgical and prosthetic rehabilitations. The reliability of a novel 3D facial camera is unclear. BibTeX

@article{2018_Liu_Reliability3DDentalCam,

author = "Liu, Shiming and Srinivasan, Murali and M{\"o}rzinger, Roland and Lancelle, Marcel and Beeler, Thabo and Gross, Markus and Solenthaler, Barbara and Fehmer, Vincent and Sailer, Irena",

title = "Reliability of a three-dimensional facial camera for dental and medical applications: a pilot study",

journal = "Journal of Prosthetic Dentistry",

year = "2019",

month = {10},

publisher = "Elsevier",

doi = "10.1016/j.prosdent.2018.10.016"

}


2018


Comparison of user satisfaction and image quality of fixed and mobile camera systems for 3-dimensional image capture of edentulous patients: A pilot clinical study. Irena Sailer, Shiming Liu, Roland Mörzinger, Marcel Lancelle, Thabo Beeler, Markus Gross, Chenglei Wu, Vincent Fehmer, Murali Srinivasan. The Journal of Prosthetic Dentistry. View at PubMed. Abstract: STATEMENT OF PROBLEM: An evaluation of user satisfaction and image quality of a novel handheld purpose-built mobile camera system for 3-dimensional (3D) facial acquisition is lacking. BibTeX

@article{2018_Sailer_3DimImgCaptureEdentulous,

author = "Sailer, Irena and Liu, Shiming and M{\"o}rzinger, Roland and Lancelle, Marcel and Beeler, Thabo and Gross, Markus and Wu, Chenglei and Fehmer, Vincent and Srinivasan, Murali",

title = "Comparison of user satisfaction and image quality of fixed and mobile camera systems for 3-dimensional image capture of edentulous patients - A pilot clinical study",

journal = "Journal of Prosthetic Dentistry",

year = "2018",

month = "10",

publisher = "Elsevier",

DOI = {10.1016/j.prosdent.2018.04.005}

}


2017


Computational Light Painting Using a Virtual Exposure. Nestor Salamon, Marcel Lancelle, Elmar Eisemann. Eurographics 2017. View at Eurographics Digital Library (with video). Download author's version (5.7 MB). Abstract: Light painting is an art form where a light source is moved during a long-exposure shot, creating trails resembling a stroke on a canvas. It is very difficult to perform because the light source needs to be moved at the intended speed and along a precise trajectory. Additionally, images can be corrupted by the person moving the light. We propose computational light painting, which avoids such artifacts and is easy to use. Taking a video of the moving light as input, a virtual exposure allows us to draw the intended light positions in a post-process. We support animation, as well as 3D light sculpting, with high-quality results. BibTeX

@inproceedings {2017_Salamon_LightPainting,

author = "Salamon, Nestor and Lancelle, Marcel and Eisemann, Elmar",

title = "Computational Light Painting Using a Virtual Exposure",

booktitle = "Computer Graphics Forum (Proceedings of Eurographics)",

volume = "36",

year = "2017",

publisher = "John Wiley \& Sons",

organization = "Eurographics",

DOI = {10.1111/cgf.13101}

}

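A crude version of a virtual exposure can be sketched in Python: keep only the video frames whose light positions are wanted and merge them with a per-pixel maximum ("lighten" blending), a common approximation of a long exposure of a bright light in a dark scene. This is an illustrative assumption; the paper's virtual exposure is more flexible than selecting whole frames, and `virtual_exposure` is a hypothetical name.

```python
def virtual_exposure(frames, selected):
    """Merge the selected video frames into one light-painting image.

    frames:   list of grayscale images (lists of rows of floats in [0, 1]).
    selected: indices of the frames whose light positions should appear.
    Uses a per-pixel maximum ("lighten" blend) instead of summing light.
    """
    h, w = len(frames[0]), len(frames[0][0])
    out = [[0.0] * w for _ in range(h)]
    for i in selected:
        for y in range(h):
            for x in range(w):
                out[y][x] = max(out[y][x], frames[i][y][x])
    return out


# Three frames of a light moving left to right over a dark background
frames = [[[0.9, 0.1, 0.1]], [[0.1, 0.8, 0.1]], [[0.1, 0.1, 0.7]]]
full_trail = virtual_exposure(frames, [0, 1, 2])
gap = virtual_exposure(frames, [0, 2])  # drop the middle light position
```

Dropping frame 1 removes the middle light position from the result, an after-the-fact edit that a physical long exposure does not allow.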

2016


Mobile AR for Dentistry - Virtual Try-On in Live 3D. Demo. Gábor Sörös, Marcel Lancelle, Roland Mörzinger and Nicolas Degen. European Conference on Computer Vision (ECCV 2016). Abstract: Digital technologies have the potential to disrupt many traditional industries including dentistry. Software becomes the main communication technology and a useful tool for showing patients the possibilities for enhancing their smiles. Despite the need for processing the visual content, today's solutions do not correspond to the state of the art in computer vision. BibTeX

@inproceedings{2016_Soeroes_DentalVirtualTryOn,

author = {S{\"o}r{\"o}s, G{\'a}bor and Lancelle, Marcel and M{\"o}rzinger, Roland and Degen, Nicolas},

title = {Mobile AR for Dentistry - Virtual Try-On in Live 3D},

booktitle = {European Conference on Computer Vision (ECCV 2016)},

year = {2016},

location = {Amsterdam, The Netherlands}

}


Anaglyph Caustics with Motion Parallax. Marcel Lancelle, Tobias Martin, Barbara Solenthaler and Markus Gross. In Pacific Graphics 2016. View at CGL. Download author's version (3.4 MB). Abstract: In this paper we present a method to model and simulate a lens such that its caustic reveals a stereoscopic 3D image when viewed through anaglyph glasses. By interpreting lens dispersion as stereoscopic disparity, our method optimizes the shape and arrangement of prisms constituting the lens, such that the resulting anaglyph caustic corresponds to a given input image defined by intensities and disparities. In addition, a slight change of the lens distance to the screen causes a 3D parallax effect that can also be perceived without glasses. Our proposed relaxation method carefully balances the resulting pixel intensity and disparity error, while taking the subsequent physical fabrication process into account. We demonstrate our method on a representative set of input images and evaluate the anaglyph caustics using multi-spectral photon tracing. We further show the fabrication of prototype lenses with a laser cutter as a proof of concept. BibTeX

@inproceedings{2016_Lancelle_AnaglyphCaustics,

author = {Lancelle, Marcel and Martin, Tobias and Solenthaler, Barbara and Gross, Markus},

title = {Anaglyph Caustics with Motion Parallax},

booktitle = {Computer Graphics Forum},

series = {Pacific Graphics},

year = {2016},

location = {Okinawa, Japan},

publisher = {Eurographics},

keywords = {caustic, anaglyph stereo, lens},

}

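The link between dispersion and disparity can be made concrete with the thin-prism approximation, in which a prism with apex angle A deviates light by roughly (n - 1) * A. Because the refractive index n is slightly higher for short (cyan) wavelengths than for long (red) ones, the two colors land at different positions on the screen. The Python sketch below only illustrates this relation; the refractive indices are assumed example values, and the paper's optimization and photon tracing are not modeled.

```python
import math


def prism_deviation(n, apex_angle):
    """Thin-prism approximation of the deviation angle in radians: (n - 1) * A."""
    return (n - 1.0) * apex_angle


def caustic_disparity(apex_angle, n_red, n_cyan, screen_distance):
    """Horizontal offset on the screen between the cyan and red images of one prism.

    The result has the same unit as screen_distance.
    """
    x_red = screen_distance * math.tan(prism_deviation(n_red, apex_angle))
    x_cyan = screen_distance * math.tan(prism_deviation(n_cyan, apex_angle))
    return x_cyan - x_red


# Assumed indices, roughly acrylic-like; screen distance in millimetres
near = caustic_disparity(0.1, 1.490, 1.500, 1000.0)
far = caustic_disparity(0.1, 1.490, 1.500, 2000.0)
```

In this simplified model the red/cyan offset, about 1 mm here, scales linearly with the lens-to-screen distance.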

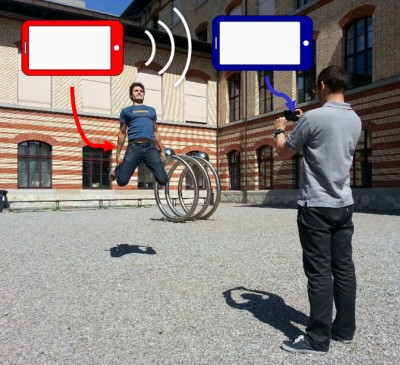

Motion Based Remote Camera Control with Mobile Devices. Sabir Akhadov, Marcel Lancelle, Jean-Charles Bazin and Markus Gross. In Proceedings of Mobile HCI 2016. View at CGL. Download author's version (2.8 MB). BibTeX

@inproceedings{2016_Akhadov_RemoteCameraControl,

author = {Akhadov, Sabir and Lancelle, Marcel and Bazin, Jean-Charles and Gross, Markus},

title = {Motion Based Remote Camera Control with Mobile Devices},

booktitle = {Proceedings of the 18th International Conference on Human-computer Interaction with Mobile Devices and Services},

series = {Mobile HCI 2016},

year = {2016},

location = {Florence, Italy},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {camera trigger, remote control, motion sensor, jump photo, video speed ramping}

}


Gnome Trader: A Location-Based Augmented Reality Trading Game. Fabio Zünd, Miriam Tschanen, Marcel Lancelle, Maria Olivares, Mattia Ryffel, Alessia Marra, Milan Bombsch, Markus Gross, Robert W. Sumner. 9th International Conference on Game and Entertainment Technologies. View at CGL. Download author's version (0.9 MB). Abstract: We explore a location-based game concept that encourages real-world interactions and gamifies daily commuting activities. Enhanced with augmented reality technology, we create an immersive, pervasive trading game called Gnome Trader, where the player engages with the game by physically traveling to predefined locations in the city and trading resources with virtual gnomes. As the virtual market is a crucial component of the game, we take special care to analyze various economic game mechanics. We explore the parameter space of different economic models using a simulation of the virtual economy. We evaluate the overall gameplay as well as the technical functionality through several play tests. BibTeX

@inproceedings{2016_Zuend_GnomeTrader,

author = {Z{\"{u}}nd, Fabio and Tschanen, Miriam and Lancelle, Marcel and Olivares, Maria and Ryffel, Mattia and Marra, Alessia and Bombsch, Milan and Gross, Markus and Sumner, Robert W.},

booktitle = {9th International Conference on Game and Entertainment Technologies (GET)},

title = {{Gnome Trader: A Location-Based Augmented Reality Trading Game}},

year = {2016},

keywords = {location-based gaming, augmented reality, virtual economy}

}


2014


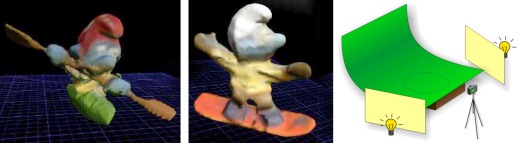

Influence of Animated Reality Mixing Techniques on User Experience. Fabio Zünd, Marcel Lancelle, Mattia Ryffel, Robert W. Sumner, Kenneth Mitchell and Markus Gross. In Proceedings of Motion in Games (MIG) 2014. View at CGL. View at ACM. Download author's version (8.4 MB). Abstract: We investigate the influence of motion effects in the domain of mobile Augmented Reality (AR) games on user experience and task performance. The work focuses on evaluating responses to a selection of synthesized camera oriented reality mixing techniques for AR, such as motion blur, defocus blur, latency and lighting responsiveness. In our cross section of experiments, we observe that these measures have a significant impact on perceived realism, where aesthetic quality is valued. However, lower latency records the strongest correlation with improved subjective enjoyment, satisfaction, and realism, and objective scoring performance. We conclude that the reality mixing techniques employed are not significant in the overall user experience of a mobile AR game, except where harmonious or convincing blended AR image quality is consciously desired by the participants. BibTeX

@inproceedings{2014_Zuend_ARMixerUserExperience,

author = {Z{\"u}nd, Fabio and Lancelle, Marcel and Ryffel, Mattia and Sumner, Robert W. and Mitchell, Kenneth and Gross, Markus},

title = {Influence of {A}nimated {R}eality {M}ixing {T}echniques on {U}ser {E}xperience},

booktitle = {$7^{th}$ International ACM SIGGRAPH Conference on Motion in Games (MIG)},

year = {2014}

}


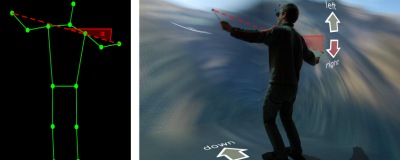

Automatic Jumping Photos on Smartphones. Cecilia Garcia, Jean-Charles Bazin, Marcel Lancelle, Markus Gross. In Proceedings of 21st IEEE International Conference on Image Processing (ICIP) 2014. View at CGL. View at IEEE. Download author's version (2.2 MB). Abstract: Jumping photos are very popular, particularly in the contexts of holidays, social events and entertainment. However, triggering the camera at the right time to take a visually appealing jumping photo is quite difficult in practice, especially for casual photographers or self-portraits. We propose a fully automatic method that solves this practical problem. By analyzing the ongoing jump motion online at a fast rate, our method predicts the time at which the jumping person will reach the highest point and takes trigger delays into account to compute when the camera has to be triggered. Since smartphones are more and more popular, we focus on these devices, which leads to some challenges such as limited computational power and data transfer rates. We developed an Android app for smartphones and used it to conduct experiments confirming the validity of our approach. BibTeX

@inproceedings{2014_Garcia_JumpingPhotos,

author = {Garcia, Cecilia and Bazin, Jean-Charles and Lancelle, Marcel and Gross, Markus},

title = {Automatic {J}umping {P}hotos on {S}martphones},

booktitle = {Proceedings of $21^{st}$ IEEE International Conference on Image Processing (ICIP)},

year = {2014}

}

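The prediction step described above can be sketched under a simple assumption: during the flight phase the person's height follows a parabola, so a least-squares quadratic fit to the tracked heights gives the apex time, and the camera must be triggered one trigger delay earlier. The Python code illustrates that idea only; it is not the paper's implementation, heights are measured upward (image rows would first need flipping), and `fit_parabola` and `trigger_time` are hypothetical names.

```python
def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_parabola(ts, ys):
    """Least-squares fit of y = a*t^2 + b*t + c; returns (a, b, c)."""
    s = [sum(t ** k for t in ts) for k in range(5)]  # sums of t^0 .. t^4
    r = [sum(y * t ** k for t, y in zip(ts, ys)) for k in range(3)]
    # Normal equations for the unknowns (a, b, c)
    m = [[s[4], s[3], s[2]],
         [s[3], s[2], s[1]],
         [s[2], s[1], s[0]]]
    rhs = [r[2], r[1], r[0]]
    det = _det3(m)
    if det == 0.0:
        raise ValueError("need at least three distinct sample times")
    coeffs = []
    for col in range(3):
        # Cramer's rule: replace one column by the right-hand side
        mc = [row[:] for row in m]
        for i in range(3):
            mc[i][col] = rhs[i]
        coeffs.append(_det3(mc) / det)
    return tuple(coeffs)


def trigger_time(ts, ys, trigger_delay):
    """Time at which to fire the shutter so the photo is taken at the jump apex."""
    a, b, _ = fit_parabola(ts, ys)
    if a >= 0:
        raise ValueError("trajectory does not curve downward; no apex to predict")
    apex = -b / (2.0 * a)
    return apex - trigger_delay


# Heights (metres, upward) sampled every 50 ms after take-off
ts = [0.0, 0.05, 0.1, 0.15, 0.2]
ys = [-4.9 * t * t + 2.45 * t + 1.0 for t in ts]
fire_at = trigger_time(ts, ys, trigger_delay=0.1)
```

With these synthetic samples the apex lies at 0.25 s, so the shutter is fired at about 0.15 s.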

An ecosystem for interactive mixed-reality applications on the web. Torsten Spieldenner, Kristian Sons, Marcel Lancelle, Fabio Zünd and Kenny Mitchell. ACM Web3D 2014, tutorial. Abstract: We give an overview of the integrated, modular toolset and basic building blocks available to achieve interactive 3D experiences on the web in the context of augmented-reality and mixed-reality applications. BibTeX

@misc{2014_Spieldenner_MixedRealityWeb,

author = {Spieldenner, Torsten and Sons, Kristian and Lancelle, Marcel and Z{\"u}nd, Fabio and Mitchell, Kenny},

title = {An ecosystem for interactive mixed-reality applications on the web},

howpublished = {ACM Web3D 2014 - Tutorial},

year = {2014}

}


2013


Thinking Penguin: Multi-modal Brain-Computer Interface Control of a VR Game. Robert Leeb, Marcel Lancelle, Vera Kaiser, Dieter W. Fellner and Gert Pfurtscheller. In IEEE Transactions on Computational Intelligence and AI in Games. View at IEEE. Abstract: We describe a multi-modal brain-computer interface (BCI) experiment, situated in a highly immersive CAVE. A subject sitting in the virtual environment controls the main character of a virtual reality game: a penguin that slides down a snowy mountain slope. While the subject can trigger a jump action via the BCI, additional steering with a game controller as a secondary task was tested. Our experiment profits from the game as an attractive task where the subject is motivated to get a higher score with a better BCI performance. A BCI based on the so-called brain-switch was applied, which allows discrete asynchronous actions. Fourteen subjects participated, of which 50% achieved the required performance to test the penguin game. Comparing the BCI performance during the training and the game showed that a transfer of skills is possible, in spite of the changes in visual complexity and task demand. Finally and most importantly, our results showed that the use of a secondary motor task, in our case the joystick control, did not deteriorate the BCI performance during the game. Through these findings, we conclude that our chosen approach is a suitable multi-modal or hybrid BCI implementation, in which the user can even perform other tasks in parallel. BibTeX

@inproceedings{2013_Leeb_ThinkingPenguin,

author = {Leeb, R. and Lancelle, M. and Kaiser, V. and Fellner, D.W. and Pfurtscheller, G.},

title = {Thinking {P}enguin: {M}ulti-modal {B}rain-{C}omputer {I}nterface {C}ontrol of a {V}{R} {G}ame},

journal = {Computational Intelligence and AI in Games, IEEE Transactions on},

volume = {PP},

number = {99},

keywords = {Brain-Computer Interfaces (BCI);brain-switch;game;hybrid BCI;multi-modal;multi-tasking;virtual reality (VR)},

doi = {10.1109/TCIAIG.2013.2242072},

ISSN = {1943-068X}

}

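A brain-switch produces a discrete event whenever a detector output stays above a threshold, independent of any external cue. A minimal Python sketch of that triggering logic, assuming a precomputed per-sample detector signal (for example band power) and two hypothetical parameters: a dwell length that suppresses short spikes and a refractory period that keeps one long activation from firing repeatedly. The EEG processing and classification used in the study are not modeled.

```python
def brain_switch(signal, threshold, dwell, refractory):
    """Return the sample indices at which a discrete switch event fires.

    signal:     per-sample detector output (e.g. band power of one EEG channel).
    threshold:  activation level the signal must exceed.
    dwell:      number of consecutive samples above threshold needed to fire.
    refractory: number of samples after an event during which nothing can fire.
    """
    events = []
    run = 0
    blocked_until = -1
    for i, value in enumerate(signal):
        if i <= blocked_until:
            run = 0  # ignore activity during the refractory period
            continue
        run = run + 1 if value > threshold else 0
        if run >= dwell:
            events.append(i)
            run = 0
            blocked_until = i + refractory
    return events


events = brain_switch([0, 1, 1, 1, 1, 1, 0, 0, 1, 1], threshold=0.5, dwell=2, refractory=3)
```

In the game setting, each returned index would correspond to one jump command for the penguin.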

2012


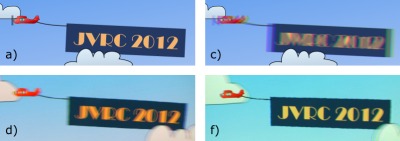

Fast Motion Rendering for Single-Chip Stereo DLP Projectors. Marcel Lancelle, Gerrit Voß and Dieter W. Fellner. In 18th Eurographics Symposium on Virtual Environments, Madrid, Spain (EGVE 2012). View at EG digital library. Abstract: Single-chip color DLP projectors show the red, green and blue components one after another. When the gaze moves relative to the displayed pixels, color fringes are perceived. To reduce these artefacts, many devices show the same input image twice at double the rate, i.e. a 60Hz source image is displayed at 120Hz. Consumer stereo projectors usually work with time-interlaced stereo, which allows each of these two images to be addressed individually. We use this so-called 3D mode for mono image display of fast moving objects. Additionally, we generate a separate image for each individual color, taking the display time offset of each color component into account. With these 360 images per second we can strongly reduce ghosting, color fringes and jitter artefacts on fast moving objects tracked by the eye, resulting in sharp objects with smooth motion. BibTeX

@inproceedings{2012_Lancelle_FastMotionProjection,

author = {Lancelle, Marcel and Vo{\ss}, Gerrit and Fellner, Dieter W.},

title = {Fast Motion Rendering for Single-Chip Stereo DLP Projectors},

year = 2012,

pages = {29-36},

URL = {http://diglib.eg.org/EG/DL/WS/EGVE/JVRC12/029-036.pdf},

DOI = {10.2312/EGVE/JVRC12/029-036},

editor = {Ronan Boulic and Carolina Cruz-Neira and Kiyoshi Kiyokawa and David Roberts},

booktitle = {Joint Virtual Reality Conference of ICAT - EGVE - EuroVR},

isbn = {978-3-905674-40-8},

issn = {1727-530X},

address = {Madrid, Spain},

publisher = {Eurographics Association}

}

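The arithmetic behind the 360 images per second is 60 Hz × 2 images per input frame × 3 color fields. Rendering each color field separately means drawing a moving object where it is at that field's display time. The Python sketch assumes the three colors share each 1/120 s slot equally, in red, green, blue order; real color wheels may use other segment sizes, orders, or repetitions, and the names are hypothetical.

```python
SOURCE_RATE_HZ = 60                 # input frames per second
IMAGES_PER_FRAME = 2                # each input frame is shown twice (120 Hz)
COLORS = ("red", "green", "blue")   # displayed one after another

SLOT_S = 1.0 / (SOURCE_RATE_HZ * IMAGES_PER_FRAME)  # duration of one 120 Hz image
FIELDS_PER_SECOND = SOURCE_RATE_HZ * IMAGES_PER_FRAME * len(COLORS)


def color_field_times(image_index):
    """Start time in seconds of each color field within one 120 Hz image."""
    start = image_index * SLOT_S
    return {color: start + k * SLOT_S / len(COLORS) for k, color in enumerate(COLORS)}


def object_position_per_color(image_index, x0, velocity):
    """Horizontal object position to render into each color field (linear motion)."""
    return {color: x0 + velocity * t for color, t in color_field_times(image_index).items()}


positions = object_position_per_color(0, 0.0, 360.0)  # object moves 360 pixels per second
```

Without the per-color offsets, all three fields would show the object at the same position, which is what produces color fringes when the eye tracks the motion.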

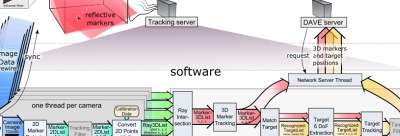

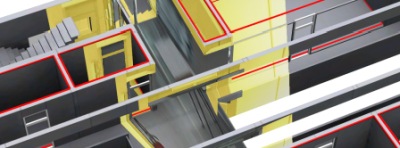

Hands-Free Navigation in Immersive Environments for the Evaluation of the Effectiveness of Indoor Navigation Systems. Volker Settgast, Marcel Lancelle, Dietmar Bauer and Dieter Fellner. In 9th Workshop Virtuelle und Erweiterte Realität 2012, Düsseldorf, Germany (VRAR 2012). Download PDF (0.5 MB). Abstract: While navigation systems for cars are in widespread use, indoor navigation systems based on smartphone apps have only recently become technically feasible. Hence, tools to plan and evaluate particular designs of information provision are needed. Since tests in real infrastructures are costly and environmental conditions cannot be held constant, one must resort to virtual infrastructures. In this paper we present hands-free navigation in such virtual worlds using the Microsoft Kinect in our four-sided Definitely Affordable Virtual Environment (DAVE). We designed and implemented navigation controls that use the user's gestures and postures as input. The installation of expensive and bulky hardware like treadmills is avoided while still giving the user a good impression of the distance she has travelled in virtual space. An advantage compared to approaches using head-mounted augmented reality is that the DAVE allows users to interact with their smartphone. Thus, the effects of different indoor navigation systems can already be evaluated in the planning phase using the resulting system. BibTeX

@inproceedings{2012_Lancelle_HandsFreeNavigationKinect,

author = {Settgast, Volker and Lancelle, Marcel and Bauer, Dietmar and Fellner, Dieter W.},

title = {Hands-Free Navigation in Immersive Environments for the Evaluation of the Effectiveness of Indoor Navigation Systems},

year = 2012,

booktitle = {$9^{th}$ Workshop "Virtuelle \& Erweiterte Realität" (VR/AR)},

note = {Paper}

}

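One way postures can drive travel is to map the forward lean of the torso to a walking speed. The Python sketch below is a hypothetical illustration, not the paper's control scheme: it computes the lean from two skeleton joints (hip center and shoulder center, as a depth-sensor skeleton tracker typically provides), assumes y points up and z points in the walking direction, and uses made-up dead-zone, angle, and speed parameters.

```python
import math


def torso_lean_deg(hip_center, shoulder_center):
    """Forward lean of the torso in degrees from two (x, y, z) skeleton joints.

    Assumes y points up and z points in the walking direction.
    """
    dy = shoulder_center[1] - hip_center[1]
    dz = shoulder_center[2] - hip_center[2]
    return math.degrees(math.atan2(dz, dy))


def lean_to_speed(lean_deg, dead_zone_deg=5.0, max_lean_deg=25.0, max_speed=2.0):
    """Map torso lean to walking speed (m/s); leaning back walks backwards.

    Leans inside the dead zone count as standing still, so small posture
    changes do not move the user; speed then grows linearly up to max_speed.
    """
    magnitude = abs(lean_deg)
    if magnitude <= dead_zone_deg:
        return 0.0
    t = min(1.0, (magnitude - dead_zone_deg) / (max_lean_deg - dead_zone_deg))
    return math.copysign(t * max_speed, lean_deg)


speed = lean_to_speed(torso_lean_deg((0.0, 0.9, 0.0), (0.0, 1.4, 0.1)))
```

The dead zone matters in an immersive setup because users naturally sway while looking around.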

2011


Visual Computing in Virtual Environments. Marcel Lancelle, Sven Havemann, Dieter W. Fellner. PhD thesis, Graz University of Technology. Download low resolution PDF (6.3 MB). Download medium resolution PDF (46 MB). Abstract: This thesis covers research on new and alternative ways of interacting with computers. Virtual Reality and multi-touch setups are discussed, with a focus on three-dimensional rendering and photographic applications in the field of Computer Graphics. Virtual Reality (VR) and Virtual Environments (VE) were once thought to be the future interface to computers. However, many problems prevent their everyday use. This work shows solutions to some of these problems and discusses the remaining issues. BibTeX

@phdthesis{2011_Lancelle_VisualComputingInVirtualEnvironments,

author = {Lancelle, Marcel and Fellner, Dieter W.},

title = {Visual Computing in Virtual Environments},

year = 2011,

school = {Graz University of Technology},

note = {PhD thesis}

}


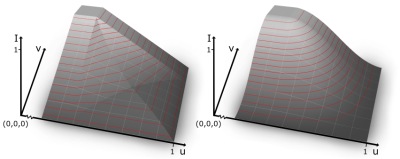

Smooth Transitions for Large Scale Changes in Multi-Resolution Images. Marcel Lancelle, Dieter W. Fellner. In 16th International Workshop on Vision, Modeling, and Visualization (VMV 2011). Download PDF (2.5 MB). View at Eurographics Digital Library. Abstract: Today's super zoom cameras offer a large optical zoom range of over 30x. It is easy to take a wide angle photograph of a scene together with a few zoomed-in high resolution crops. Little work has been done on appropriately displaying the high resolution photo as an inset. Usually, alpha blending is used to hide the resolution transition, which may result in visible transition boundaries or ghosting artifacts. In this paper we introduce a different, novel approach to overcome these problems. Across the transition, we gradually attenuate the maximum image frequency. We achieve this with a Gaussian blur with an exponentially increasing standard deviation. BibTeX

@inproceedings{2011_Lancelle_SmoothTransitionsInMultiResolutionImages,

author = {Lancelle, Marcel and Fellner, Dieter W.},

title = {Smooth Transitions for Large Scale Changes in Multi-Resolution Images},

year = 2011,

booktitle = {16$^{th}$ International Workshop on Vision, Modeling, and Visualization (VMV)},

note = {Paper},

doi = {10.2312/PE/VMV/VMV11/081-087}

}

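The transition described above can be sketched in one dimension: the blur's standard deviation grows by a constant factor per pixel across the transition band, so the retained maximum frequency falls off smoothly. The Python code assumes the band width and the smallest and largest standard deviations are given (in practice they would depend on the resolution ratio of the two photos); `sigma_schedule` and `blur_row_varying` are hypothetical names, and a full implementation would work on 2D images.

```python
import math


def sigma_schedule(num_steps, sigma_min, sigma_max):
    """Standard deviations growing by a constant factor from sigma_min to sigma_max."""
    if num_steps == 1:
        return [sigma_min]
    ratio = sigma_max / sigma_min
    return [sigma_min * ratio ** (i / (num_steps - 1)) for i in range(num_steps)]


def gaussian_kernel(sigma):
    """Normalized 1D Gaussian kernel truncated at about three standard deviations."""
    radius = max(1, math.ceil(3.0 * sigma))
    weights = [math.exp(-(k * k) / (2.0 * sigma * sigma)) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_row_varying(row, sigmas):
    """Blur a 1D signal where output pixel i uses standard deviation sigmas[i].

    Samples beyond the ends are clamped to the border pixel.
    """
    n = len(row)
    out = []
    for i, sigma in enumerate(sigmas):
        kernel = gaussian_kernel(sigma)
        r = len(kernel) // 2
        out.append(sum(w * row[min(n - 1, max(0, i + k - r))] for k, w in enumerate(kernel)))
    return out


# Sharp step edge; blur increases from left to right across a 6-pixel band
edge = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]
smoothed = blur_row_varying(edge, sigma_schedule(len(edge), 0.5, 4.0))
```

Because each step multiplies the standard deviation by the same factor, the blur changes evenly on a logarithmic frequency scale rather than abruptly at one end.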

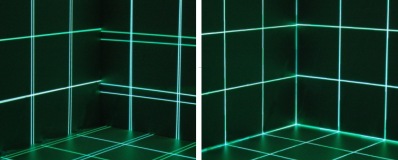

Soft Edge and Soft Corner Blending. Marcel Lancelle, Dieter W. Fellner. In Workshop "Virtuelle & Erweiterte Realität" (VR/AR 2011). Download PDF (4.3 MB). Abstract: We address artifacts at corners in soft edge blend masks for tiled projector arrays. We compare existing and novel modifications of the commonly used weighting function and analyze the first order discontinuities of the resulting blend masks. In practice, e.g. when the projector lamps are not equally bright or with rear projection screens, these discontinuities may lead to visible artifacts. By using first order continuous weighting functions, we achieve significantly smoother results compared to commonly used blend masks. BibTeX

@inproceedings{2011_Lancelle_SoftCornerBlending,

author = {Lancelle, Marcel and Fellner, Dieter W.},

title = {Soft Edge and Soft Corner Blending},

year = 2011,

booktitle = {Workshop "Virtuelle \& Erweiterte Realität" (VR/AR)},

note = {Paper}

}

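The difference between a common linear ramp and a first-order continuous weight can be sketched in Python. The code uses smoothstep, 3t² - 2t³, which has zero slope at both ends of the overlap, as an example C1 function; this is an assumption for illustration, not necessarily one of the functions compared in the paper. For a corner where four projectors overlap, each projector's weight is the product of its horizontal and vertical edge weights, so the four weights still sum to one. All names are hypothetical.

```python
def smoothstep(t):
    """C1-continuous ramp 3t^2 - 2t^3 on [0, 1]; slope is zero at both ends."""
    t = min(1.0, max(0.0, t))
    return t * t * (3.0 - 2.0 * t)


def linear_ramp(t):
    """Conventional linear ramp on [0, 1]; its slope jumps at both ends."""
    return min(1.0, max(0.0, t))


def edge_weights(u, overlap_start, overlap_end, ramp=smoothstep):
    """Weights of the (first, second) tile sharing the overlap [overlap_start, overlap_end]."""
    w = ramp((u - overlap_start) / (overlap_end - overlap_start))
    return 1.0 - w, w


def corner_weights(x, y, x_overlap, y_overlap, ramp=smoothstep):
    """Weights of a 2x2 projector array at (x, y), keyed by (column, row).

    Each weight is the product of a horizontal and a vertical edge weight, so the
    four weights always sum to one.
    """
    wx = edge_weights(x, *x_overlap, ramp=ramp)
    wy = edge_weights(y, *y_overlap, ramp=ramp)
    return {(i, j): wx[i] * wy[j] for i in range(2) for j in range(2)}


center = corner_weights(0.5, 0.5, (0.0, 1.0), (0.0, 1.0))
```

Passing `ramp=linear_ramp` gives a conventional linear blend for comparison; its weights also sum to one, but their slope jumps at the overlap boundaries.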

Next-Generation 3D Visualization for Visual Surveillance. Peter Roth, Volker Settgast, Peter Widhalm, Marcel Lancelle, Josef Birchbauer, Norbert Brändle, Sven Havemann, Horst Bischof. 8th IEEE International Conference on Advanced Video and Signal-Based Surveillance (AVSS 2011). View at IEEE Digital Library. Abstract: Existing visual surveillance systems typically require human operators to observe video streams from different cameras, which becomes infeasible as the number of cameras keeps increasing. In this paper, we present a new surveillance system that combines automatic video analysis (i.e., single person tracking and crowd analysis) and interactive visualization. Our novel visualization takes advantage of a high resolution display and given 3D information to focus the operator's attention on interesting or critical parts of the observed area. This is realized by embedding the results of automatic scene analysis techniques into the visualization. The system provides different visualization modes, so the user can easily switch between them and select the mode that provides the most information. The system is demonstrated for a real setup on a university campus. BibTeX

@inproceedings{2011_Roth_AUTOVISTA,

author = {Roth, Peter M. and Settgast, Volker and Widhalm, Peter and Lancelle, Marcel and Birchbauer, Josef and Br\"{a}ndle, Norbert and Havemann, Sven and Bischof, Horst},

title = {Next-Generation 3{D} Visualization for Visual Surveillance},

year = 2011,

booktitle = {$8^{th}$ IEEE International Conference on Advanced Video and Signal-Based Surveillance (AVSS)},

note = {Poster}

}


2010


Cortical Correlate of Spatial Presence in 2-D and 3-D Interactive Virtual Reality: An EEG Study. Silvia Kober, Marcel Lancelle, Dieter W. Fellner and Christa Neuper. RAVE-10, Barcelona, Spain. Talk. Download abstract (PDF, 0.1 MB) BibTeX

@inproceedings{2010_Kober_Navigation,

author = {Kober, Silvia and Lancelle, Marcel and Fellner, Dieter W. and Neuper, Christa},

title = {Cortical correlate of spatial presence in 2-{D} and 3-{D} interactive virtual reality: An {EEG} study},

year = 2010,

booktitle = {RAVE-10, Barcelona, Spain},

note = {Talk}

}


2009


Spatially Coherent Visualization of Image Detection Results using Video Textures. Volker Settgast, Marcel Lancelle, Sven Havemann and Dieter W. Fellner. In 33rd Workshop of the Austrian Association for Pattern Recognition (AAPR/ÖAGM 2009). Download PDF (8.0 MB). Abstract: Camera-based object detection and tracking are image processing tasks that typically do not take 3D information into account. Spatial relations, however, are sometimes crucial to judge the correctness or importance of detection and tracking results. Especially in applications with a large number of image processing tasks running in parallel, traditional methods of presenting detection results do not scale. In such cases it can be very useful to transform the detection results back into their common 3D space. We present a computer graphics system that is capable of showing a large number of detection results in real time, using different levels of abstraction, on various hardware configurations. As an example application, we demonstrate our system with a surveillance task involving eight cameras. BibTeX

@inproceedings{2009_Lancelle_SpatiallyCoherentVisualization,

author = {Settgast, Volker and Lancelle, Marcel and Havemann, Sven and Fellner, Dieter W.},

title = {Spatially Coherent Visualization of Image Detection Results using Video Textures},

year = 2009,

booktitle = {$33^{rd}$ Workshop of the Austrian Association for Pattern Recognition (AAPR/OAGM)}

}

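Transforming detections back into a common 3D space can be sketched for the simplest case, people standing on a flat ground plane. Assuming each camera has a calibrated image-to-ground homography H (a hypothetical input here), the bottom-center of a detection box maps to a ground position shared by all cameras. This Python sketch illustrates that generic step only; it is not the paper's video-texture rendering, and the function names are hypothetical.

```python
def apply_homography(H, u, v):
    """Map image pixel (u, v) to ground-plane coordinates (X, Y) with a 3x3 homography."""
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    if abs(w) < 1e-12:
        raise ValueError("pixel maps to a point at infinity")
    return x / w, y / w


def detections_to_world(H, boxes):
    """Place each detection box (u_min, v_min, u_max, v_max) at its foot point on the ground.

    The foot point is the bottom-center of the box (image v grows downward).
    """
    return [apply_homography(H, (u0 + u1) / 2.0, v1) for u0, _, u1, v1 in boxes]


# Hypothetical calibration: 1 pixel = 1 cm on the ground, no perspective
H = [[0.01, 0.0, 0.0], [0.0, 0.01, 0.0], [0.0, 0.0, 1.0]]
positions = detections_to_world(H, [(100.0, 50.0, 140.0, 200.0)])
```

Once all cameras report ground positions in the same frame, detections from different views can be drawn together in one 3D scene.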

2008


Definitely Affordable Virtual Environment. Marcel Lancelle, Volker Settgast and Dieter W. Fellner. In IEEE Virtual Reality Conference (2008). Download description as PDF (0.4 MB). Introduction: The DAVE is an immersive projection environment, a four-sided CAVE. DAVE stands for 'definitely affordable virtual environment'. 'Affordable' means that by mostly using standard hardware components we can greatly reduce costs compared to other commercial systems. We show the hardware setup and some applications in the accompanying video. In 2005 we built a new version of our DAVE at the University of Technology in Graz, Austria. Room restrictions motivated a new compact design to make optimal use of the available space. The back projection material with a custom shape is stretched onto the wooden frame to provide a flat surface without ripples. BibTeX

@inproceedings{2008_Lancelle_DAVEVideo,

author = {Lancelle, Marcel and Settgast, Volker and Fellner, Dieter W.},

title = {{D}efinitely {A}ffordable {V}irtual {E}nvironment},

year = 2008,

booktitle = {IEEE Virtual Reality Conference},

note = {Video}

}

|

|

Intuitive Navigation in Virtual Environments. Marc Steiner, Philipp Reiter, Christian Ofenböck, Volker Settgast, Torsten Ullrich, Marcel Lancelle and Dieter W. Fellner. In 14th Eurographics Symposium on Virtual Environments, Eindhoven, Netherlands (EGVE 2008). Poster. View at EG digital library, download PDF from there (0.5 MB) Abstract: We present several novel ways of interaction and navigation in virtual worlds. Using the optical tracking system of our four-sided Definitely Affordable Virtual Environment (DAVE), we designed and implemented navigation and movement controls based on the user's gestures and postures. Our techniques are more natural and intuitive than a standard 3D joystick-based approach, which compromises immersion. BibTeX

@inproceedings{2008_Steiner_IntuitiveNavigation,

author = {Steiner, Marc and Reiter, Philipp and Ofenb\"{o}ck, Christian and Settgast, Volker and Ullrich, Torsten and Lancelle, Marcel and Fellner, Dieter W.},

title = {Intuitive Navigation in Virtual Environments},

year = 2008,

booktitle = {$14^{th}$ Eurographics Symposium on Virtual Environments, Eindhoven, Netherlands},

note = {Poster}

}

|

2007

|

3D-Powerpoint - Towards a design tool for digital exhibitions of cultural artifacts. Sven Havemann, Volker Settgast, Marcel Lancelle and Dieter W. Fellner. In Proceedings of the International Symposium on Virtual Reality, Archaeology and Cultural Heritage (VAST 2007). View at EG digital library, download PDF from there (0.5 MB) Abstract: We describe first steps towards a suite of tools for cultural heritage (CH) professionals to set up and run digital exhibitions of cultural 3D artifacts in museums. Both the authoring and the presentation views should ultimately be as easy to use as, e.g., Microsoft PowerPoint. But instead of separate slides, our tool uses predefined 3D scenes, called "layouts", containing geometric objects that act as placeholders, called "drop targets". These can be replaced quite easily, in a drag-and-drop fashion, by digitized 3D models, and also by text and images, to customize and adapt a digital exhibition to the style of the real museum. Furthermore, the tool set contains easy-to-use tools for the rapid 3D modeling of simple geometry and for aligning given models to a common coordinate system. BibTeX

@inproceedings{2007_Havemann_3DPowerpoint,

author = {Havemann, Sven and Settgast, Volker and Lancelle, Marcel and Fellner, Dieter W.},

title = {3{D}-Powerpoint - Towards a design tool for digital exhibitions of cultural artifacts},

year = 2007,

booktitle = {VAST},

}

|

2006

|

Minimally invasive projector calibration for 3D applications. Marcel Lancelle, Lars Offen, Torsten Ullrich and Dieter W. Fellner. In Dritter Workshop Virtuelle und Erweiterte Realität der GI-Fachgruppe VR/AR (VRAR 2006). Download PDF (0.9 MB) Abstract: Addressing the typically time-consuming adjustment of projector equipment in VR installations, we propose an easy-to-implement projector calibration method that effectively corrects images projected onto planar surfaces and does not require any additional hardware. For hardware-accelerated 3D applications, only the projection matrix has to be modified slightly; thus there is no performance impact, and existing applications can be adapted easily. BibTeX

@inproceedings{2006_Lancelle_ProjectorCalibration,

author = {Lancelle, Marcel and Offen, Lars and Ullrich, Torsten and Fellner, Dieter W.},

title = {Minimally Invasive Projector Calibration for 3{D} Applications},

year = 2006,

booktitle = {Dritter Workshop Virtuelle und Erweiterte Realität der GI-Fachgruppe VR/AR},

}

|

2005

|

Mining Data 3D Visualization and Virtual Reality: Enhancing the Interface of LeapFrog3D. Raphael Grasset, Marcel Lancelle and Mark Billinghurst. Technical report, HITLabNZ, 2005. Download PDF (0.9 MB) Introduction: Leapfrog 3D is 3D visualization software for geological modeling, focused on the mining domain. The tool allows users to filter, analyze and visualize different types of underground measurements (drill holes, seismic data, etc.). The software provides a much faster way of generating possible raw grade morphological models than the long explicit modeling sessions needed with current classical tools. By using implicit modeling and a proprietary interpolation algorithm (fastRBF™), geologists can simply introduce an interpreted morphological model, and the software will rapidly converge to a new meshing solution (Figure 1). BibTeX

@techreport{2005_Grasset_EnhancingTheInterfaceOfLeapFrog3D,

author = "Grasset, Raphael and Lancelle, Marcel and Billinghurst, Mark",

title = "{M}ining {D}ata 3{D} {V}isualization and {V}irtual {R}eality: {E}nhancing the {I}nterface of {L}eap{F}rog3{D}",

year = "2005",

institution = {HITLabNZ},

note = {Technical report}

}

|

2004

|

Large public interactive displays - Overview. Marcel Lancelle and Mark Billinghurst. HITLabNZ, 2004. Technical report. Download PDF (0.2 MB) Abstract: Many projects exist that are related, to a greater or lesser degree, to large public interactive displays. Most of them exist for research purposes. The following text describes various issues, ideas and existing solutions. BibTeX

@techreport{2004_Lancelle_InteractiveLargeScreenDisplays,

author = {Lancelle, Marcel and Billinghurst, Mark},

title = {Public Interactive Large Screen Displays},

year = 2004,

institution = {HITLabNZ},

note = {Technical report}

}

|

|

Current issues on 3D city models. Marcel Lancelle, Dieter W. Fellner. In Image and Vision Computing New Zealand (2004). Poster. Download PDF (2.5 MB) Abstract: This research covers issues of the automatic generation and visualization of a 3D city model from existing digital data. Aerial photos, LIDAR point clouds, and land-use and cadastral maps are used as data sources for the automated data fusion in a sample implementation. Combining existing techniques and new ideas, the developed framework enables automatic information enrichment and the generation of plausible details. BibTeX

@inproceedings{2004_Lancelle_CurrentIssuesOn3DCityModels,

author = {Lancelle, Marcel and Fellner, Dieter W.},

title = {Current issues on 3{D} city models},

year = 2004,

booktitle = {Image and Vision Computing New Zealand},

note = {Poster}

}

|

|

Automatische Generierung und Visualisierung von 3D-Stadtmodellen. (in German) Marcel Lancelle, Dieter W. Fellner. Master's thesis, Technische Universität Braunschweig, 2004. Download PDF (4.3 MB) Abstract: The topic of 3D city models is very diverse and interdisciplinary. This chapter gives an overview of the material covered and the goals of this diploma thesis. BibTeX

@mastersthesis{2004_Lancelle_3DStadtmodelle,

author = {Lancelle, Marcel and Fellner, Dieter W.},

title = {{A}utomatische {G}enerierung und {V}isualisierung von 3{D}-{S}tadtmodellen},

year = 2004,

school = {Technische Universit\"{a}t Braunschweig},

note = {Master's thesis}

}

|

2003

|

Silhouettenbasiertes 3D-Scannen. (in German) Marcel Lancelle, Sven Havemann. Bachelor's thesis, Technische Universität Braunschweig, 2003. Download PDF (0.4 MB) Introduction: Creating 3D models is an important step in computer graphics. With today's modeling tools this is easy to accomplish for some objects, but there are also many objects for which modeling would be very laborious. 3D scanners can help here, provided that the object already exists and is available. Each of the many 3D scanning methods has limitations regarding the size, shape, consistency or other material properties of the object. Often, however, 3D scanning is simply not used for financial reasons. BibTeX

@misc{2003_Lancelle_3DScanner,

author = {Lancelle, Marcel and Havemann, Sven},

title = {{S}ilhouettenbasiertes 3{D}-{S}cannen},

year = 2003,

school = {Technische Universit\"{a}t Braunschweig},

note = {Bachelor's thesis}

}

|

©2003-2021 Marcel Lancelle